Cohere

Founded Year

2019

Stage

Series D - II | Alive

Total Raised

$971.25M

Valuation

$0000

Last Raised

$50M | 2 mos ago

Revenue

$0000

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

-7 points in the past 30 days

About Cohere

Cohere operates as a natural language processing (NLP) company. It offers services that enable businesses to integrate artificial intelligence (AI) into products, with capabilities such as generating text for product descriptions, blog posts, and articles, understanding the meaning of text for search and content moderation, and creating summaries of text and documents. It primarily serves the enterprise sector, providing AI solutions that can be customized to suit various use cases, domains, or industries. It was founded in 2019 and is based in Toronto, Canada.



ESPs containing Cohere

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

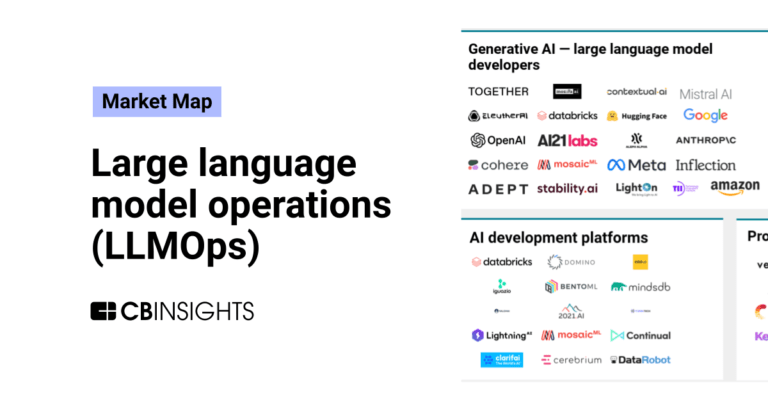

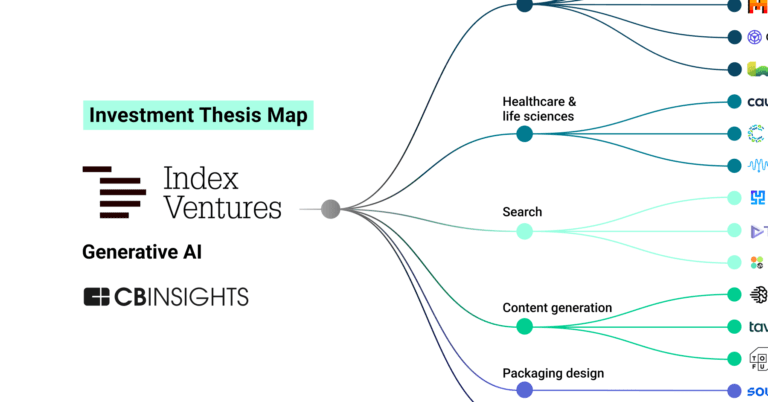

The generative AI — large language model (LLM) developers market offers foundation models and APIs that enable enterprises to build natural language processing applications for a number of functions. These include content creation, summarization, classification, chat, sentiment analysis, and more. Enterprises can fine-tune and customize these large-scale language models — which are pre-trained on …

Cohere is named an Outperformer among 15 other companies, including Google, IBM, and OpenAI.

Cohere's Products & Differentiators

Classify

Access massive language models that can understand text and take appropriate action, such as highlighting a post that violates your community guidelines or triggering accurate chatbot responses. Classify uses machine learning to analyze text and sort it into specific categories. Build automated text classifiers into your application to identify toxic language, automatically route customer queries, or detect breaking trends in product reviews.


Research containing Cohere

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Cohere in 15 CB Insights research briefs, most recently on Jul 31, 2024.

Feb 27, 2024

The generative AI boom in 6 charts

Aug 16, 2023 report

State of AI Q2’23 Report

Jul 14, 2023

The state of LLM developers in 6 charts

Expert Collections containing Cohere

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Cohere is included in 6 Expert Collections, including Unicorns- Billion Dollar Startups.

Unicorns- Billion Dollar Startups

1,244 items

Artificial Intelligence

14,767 items

Companies developing artificial intelligence solutions, including cross-industry applications, industry-specific products, and AI infrastructure solutions.

Digital Content & Synthetic Media

2,266 items

The Synthetic Media collection includes companies that use artificial intelligence to generate, edit, or enable digital content under all forms, including images, videos, audio, and text, among others.

AI 100

200 items

Generative AI 50

50 items

CB Insights' list of the 50 most promising private generative AI companies across the globe.

Generative AI

863 items

Companies working on generative AI applications and infrastructure.

Cohere Patents

Cohere has filed 8 patents.

The 3 most popular patent topics include:

- radio resource management

- wireless networking

- channel access methods

| Application Date | Grant Date | Title | Related Topics | Status |
|---|---|---|---|---|
| 1/27/2021 | 8/27/2024 | | Information theory, Telecommunication theory, Wireless networking, Radio resource management, Channel access methods | Grant |

Latest Cohere News

Sep 20, 2024

Eduardo Mota, Cloud Data Architect - AI/ML Specialist at DoiT International, offers insight on large language model fundamentals and leveraging AWS. This article originally appeared on Solutions Review’s Insight Jam, an enterprise IT community enabling the human conversation on AI.

Large language models (LLMs) have become all the rage. Talk to anyone working with artificial intelligence (AI) and you’ll likely hear a new universe of names, including Claude, Cohere and Llama2. Setting aside the hype, what exactly are LLMs? How are they used to create powerful AI applications? Are there different types? In the wake of the LLM explosion, many folks are understandably playing catch up. In the following, we’ll look at the fundamentals of LLMs and address some common questions. Further, you’ll find some tips on how to use them effectively with the world’s largest cloud provider, Amazon Web Services (AWS).

How do LLMs work?

LLMs are neural networks trained on a mass of data to understand and generate human language. These networks are made up of layers of interconnected neurons, each receiving input signals from others. The inputs are processed through an activation function, and the resulting output signals are sent on to the next layer. The connections between nodes have adjustable weights, referred to as parameters. In the case of LLMs, we’re talking about billions to trillions of parameters, used to identify and map complex patterns like human language.

LLMs learn to match patterns involving words and sentences. The neurons and the connections between them are able to capture very sophisticated language structures and patterns. The end result is a model so laden with textual data that it can continue sentences, respond to questions, summarize content and more. Basically, it matches the patterns you give it with those it learned during training.

Still, as impressive as LLMs can be, they lack real reasoning and comprehension. They work from probability, not actual knowledge. Yet, with the proper prompts and tuning, they can simulate comprehension so long as the domains and tasks are very focused.

Are There Different Types of LLMs?

Right now, there are primarily three types of LLMs, each with a unique approach. They are:

- Autoregressive: These models try to predict the next word in a sequence, using the previous words as context. They are best for text generation, though they’re also good for classification and summarization. Autoregressive models are being hailed lately for their ability to generate content.

- Autoencoding: This type of model is trained on text with missing words, enabling it to learn context and then predict the information that’s missing. These models beat autoregressive ones when it comes to understanding context, but they don’t generate text reliably. On the upside, autoencoding models are not as compute-intensive and have proven very effective for summarizing and classification.

- Seq2Seq: This text-to-text type of model combines the approaches of both autoregressive and autoencoding models. As a result, it’s particularly effective when it comes to text summarization and translation.

How Do LLMs Work with AWS?

Cloud providers have resources in place to help construct LLMs. As an example, we’ll look at the LLM-related services of AWS, robust offerings enabling the creation of LLM-powered apps without training from scratch.

First up is Amazon Bedrock, a fully managed service that simplifies the creation and scaling of gen AI apps. It provides access to an array of foundation models through a single API. These come from top AI companies like Anthropic, Cohere, Meta, Mistral AI and more. Key features include:

- Choice and Customization: Allows users to choose from a variety of foundation models to find the best fit. The models can also be customized with user data, allowing for personalized and domain-specific applications.

- Serverless Experience: A serverless architecture eliminates the need for users to manage infrastructure. It also enables easy integration and deployment of AI into apps with familiar AWS tools.

- Security and Privacy: Bedrock ensures security and privacy, while strongly adhering to AI principles. Users can also keep their data private and secure while working with advanced AI capabilities.

- Knowledge Base: Lets architects enhance AI apps with retrieval augmented generation (RAG) techniques. It aggregates various data sources into a central repository so models and agents stay current while returning context-specific and accurate responses. It supports seamless integration with widely used storage databases, so RAG can be implemented without managing complex, underlying infrastructure.

- Agents: Architects can build and configure autonomous agents within apps. This makes it easier for end-users to complete actions with data and user input. Agents orchestrate model interactions, data sources, software apps and conversations. They also automatically call APIs and leverage knowledge bases to greatly reduce development efforts.

Next is Amazon SageMaker JumpStart, a machine-learning hub offering pre-trained models and solution templates for various problem scenarios. It allows for incremental training and makes it easy to deploy, fine-tune and try popular models in the infrastructure of your choice. These ready-made solutions save a lot of development time.

Finally, there’s Amazon Q. This GenAI-powered assistant focuses on business needs and allows users to make customizations for specific apps. It’s gained a reputation as a versatile tool, facilitating the creation, operation and understanding of apps and workloads.

Knowledge is Key

Understanding LLMs, how they work and what they are capable of is critical for successful project outcomes. Not only that, teams must be aware of the available tools and which are best suited to work with their data sets. If you lack the in-house expertise, consult with a knowledgeable partner. Working with LLMs requires enormous resources, and compute costs can quickly get out of hand without moving the project closer to success. But a little understanding will go a long way to getting across the finish line without breaking the bank.
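The article's description of how a neural network processes signals (inputs multiplied by adjustable weights, passed through an activation function, and forwarded to the next layer) can be illustrated with a toy forward pass. This is only a minimal sketch under stated assumptions: the layer sizes, weight values, and function names (`dense_layer`, `forward`) are hypothetical, and it is not how Cohere or any production LLM is implemented. Real models have billions of learned parameters rather than a handful of hand-picked ones.

```python
import math


def relu(x: float) -> float:
    """Activation function: pass positive signals through, zero out the rest."""
    return max(0.0, x)


def dense_layer(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum of all inputs, adds a bias,
    and applies the activation function before passing the result onward."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical, hand-picked parameters: 3 inputs -> 2 hidden neurons -> 2 outputs.
hidden_w = [[0.5, -1.0, 0.25], [1.0, 0.5, -0.5]]
hidden_b = [0.0, 0.1]
out_w = [[1.0, -1.0], [-1.0, 1.0]]
out_b = [0.0, 0.0]


def forward(inputs):
    """Run the inputs through both layers and return output probabilities."""
    hidden = dense_layer(inputs, hidden_w, hidden_b, relu)
    scores = dense_layer(hidden, out_w, out_b, lambda s: s)  # no activation on the output layer
    return softmax(scores)


probs = forward([1.0, 2.0, 3.0])
print(probs)
```

The final softmax step reflects the article's point that these models work from probability: the network's output is a distribution over candidates, not a looked-up fact.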

Cohere Frequently Asked Questions (FAQ)

When was Cohere founded?

Cohere was founded in 2019.

Where is Cohere's headquarters?

Cohere's headquarters is located at 171 John Street, Toronto.

What is Cohere's latest funding round?

Cohere's latest funding round is Series D - II.

How much did Cohere raise?

Cohere raised a total of $971.25M.

Who are the investors of Cohere?

Investors of Cohere include Salesforce Ventures, NVIDIA, Oracle, Public Sector Pension Investment Board, Export Development Canada and 22 more.

Who are Cohere's competitors?

Competitors of Cohere include Sakana AI, Lyzr, Vectara, Argilla, Mistral AI and 7 more.

What products does Cohere offer?

Cohere's products include Classify and 2 more.


Compare Cohere to Competitors

AI21 Labs operates as an artificial intelligence (AI) lab and product company. The company offers a range of AI-powered tools, including a writing companion tool to assist users in rephrasing their writing, and an AI reader that summarizes long documents. It also provides language models for developers to create AI-powered applications. It was founded in 2017 and is based in Tel Aviv-Yafo, Israel.

01.AI provides open-source AI models and applications that support human productivity. Its offerings include open-source and proprietary language models capable of processing text in multiple languages, with a particular emphasis on English and Chinese. 01.AI primarily serves the technology and platform development sectors with a vision of integrating AI. The company was founded in 2023 and is based in Haidian, China.

Anthropic is an AI safety and research company that specializes in developing advanced AI systems. The company's main offerings include AI research and products that prioritize safety, with a focus on creating conversational AI assistants for enterprise use. Anthropic primarily serves sectors that require reliable and interpretable AI technology. It was founded in 2021 and is based in San Francisco, California.

Hugging Face focuses on advancing artificial intelligence through collaboration in the technology sector. It provides a platform for machine learning professionals to build, share, and collaborate on models, datasets, and applications. The company offers solutions that cater to various modalities, including text, image, video, audio, and 3D, as well as enterprise-grade services for teams requiring advanced AI tooling with enhanced security and support. It was founded in 2016 and is based in Paris, France.

LangChain specializes in the development of large language model (LLM) applications, providing a suite of products that support developers throughout the application lifecycle. It offers a framework for building context-aware, reasoning applications, tools for debugging, testing, and monitoring app performance, and solutions for deploying APIs with ease. It was founded in 2022 and is based in San Francisco, California.

One AI is a company that specializes in generative artificial intelligence (AI) within the technology sector. The company offers services such as language analytics, customizable AI skills, and the processing of text, audio, and video data into structured, actionable insights. It primarily serves sectors such as customer service, e-commerce, media, healthcare, and government. It was founded in 2021 and is based in Ramat Gan, Israel.
