Ethyca

Founded Year

2018

Stage

Series A - II | Alive

Total Raised

$25.2M

Last Raised

$7.5M | 3 yrs ago

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

-64 points in the past 30 days

About Ethyca

Ethyca specializes in automated data privacy compliance and operates within the data protection and privacy sector. The company offers a suite of tools that automate the majority of data privacy requirements, simplifying compliance for businesses and developers. Ethyca's solutions cater to various sectors including financial services, healthcare, retail & e-commerce, advertising, and data & analytics. It was founded in 2018 and is based in New York, New York.

ESPs containing Ethyca

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The consent & preference management market offers solutions to help companies collect, store, and manage customer preferences regarding data usage and communication channels. By ensuring compliance with regulations like GDPR and CCPA, these solutions reduce the risk of costly legal penalties and reputational damage. Moreover, consent & preference management solutions empower customers by providing…

Ethyca is named as a Challenger among 13 other companies, including OneTrust, BigID, and Osano.

Ethyca's Products & Differentiators

Fides

Open source definition language that allows any developer team to quickly describe the data characteristics of their system

Research containing Ethyca

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Ethyca in 2 CB Insights research briefs, most recently on Oct 10, 2023.

Oct 10, 2023

The legal tech market map

Expert Collections containing Ethyca

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

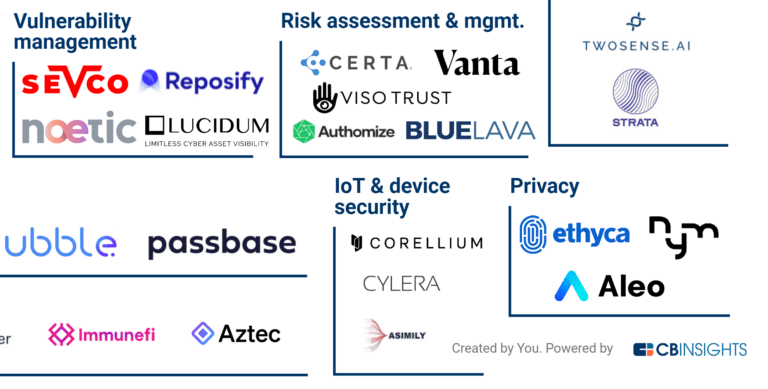

Ethyca is included in 3 Expert Collections, including Cybersecurity.

Cybersecurity

9,914 items

These companies protect organizations from digital threats.

Retail Media Networks

324 items

Tech companies helping retailers build and operate retail media networks. Includes solutions like demand-side platforms, AI-generated content, digital shelf displays, and more.

Defense Tech

1,268 items

Defense tech is a broad field that encompasses everything from weapons systems and equipment to geospatial intelligence and robotics. Company categorization is not mutually exclusive.

Latest Ethyca News

Oct 17, 2023

Data Rights Protocol is Working to Automate the Resolution of Data Rights Requests

The U.S. may be late to the party when it comes to codifying data privacy protections into law, but the states doing so have made a flashy entrance. The California Consumer Privacy Act (CCPA) quickly became recognized as the most stringent privacy protection of any jurisdiction. Virginia and Colorado have also gone down the path of putting individuals in control of their data. But the CCPA is the legislation that proactive tech companies are complying with for now, in some cases voluntarily, so they can assure customers they are putting privacy first.

The CCPA empowers consumers by allowing them to make requests to companies they deal with and direct how their data can be used or stored. Consumers can direct that their data not be sold to other parties, access their data held by a third party, and request its deletion.

“The center point is the individual and all their personal data emanations,” explains Daniel ‘Dazza’ Greenwood, the Data Rights Protocol lead at Consumer Reports Digital Lab. “Data Rights Protocol (DRP) is about giving people ownership over their data. It’s narrowly scoped around rights articulated in existing law and must be complied with in a mandatory way by data holders.”

Built out of the Consumer Reports Innovation Lab starting in the summer of 2021, DRP is a project that seeks to move state law from manual requests involving several parties to a technical standard that allows for automated resolution of consumer data requests. It’s translating law into code and publishing it to GitHub, detailing its open-source set of API endpoints that will streamline how organizations respond to consumer data requests.

“An important part of the protocol design is that it’s better than what’s being done.
No statute says people have to adhere to the law through this protocol; it’s voluntary, and our value proposition is to make it so good that people and companies would choose to use it because it’s faster, cheaper, and better,” Greenwood says.

The current system to resolve CCPA requests involves a consumer making a request, the business that receives the request, authorized agents that can act on behalf of consumers to make requests of many different businesses, and software providers that help organizations comply with privacy laws. Authorized agents interact with the privacy software providers to detail how data rights requests are made and received. DRP will standardize that across the industry. It will make the process more repeatable and predictable for organizations, giving them cost certainty for managing the requests.

DRP published its first stable release, version 0.9, on Sept. 12. It is working with digital privacy firms OneTrust, Transcend, Incogni, and Ethyca to test and implement the protocol into production.

The concept makes sense for organizations keen to comply with the law. But what about those with data broker business models that could see compliance as a threat to profitability?

“We’ll know definitively by 2025 and 2026 exactly what the propagation and adoption rates look like, and it’s possible that the companies you refer to as the worst offenders may be the last holdouts,” Greenwood acknowledges. “I still think this would be very worth doing because if the vast majority of companies are doing things the same way, it makes it somewhat easier to look at a small number of worst offenders when it comes time for enforcement.”

Greenwood points to Spokeo, a founding member of DRP, as an example of a business model that depends on providing other people’s data to its customers. The website offers people lookup for name, email, phone, and address information.

In the future, DRP could extend its utility beyond California.
It will start with the CCPA but could provide the same capability in multiple jurisdictions, including where GDPR is in effect. The protocol can specify the consumer’s geography. That will start as a binary between “California” and “voluntary” but could be updated for future versions. If DRP succeeds, it could play a role in a future where a consumer simply uses an app to assert their data rights and can expect they will be respected.

Glaze Provides Artists a Defense Against AI Mimicry

Where DRP is designed to convey more efficiently what’s already in law, Glaze is a technology that seeks to help artists who feel they are victims of the gap between cutting-edge technology and legal precedent. The backlash to generative AI’s ability to instantly create content in the unique style of well-known creators includes the lawsuits mentioned earlier in this article. Still, those court cases will take months, if not years, to play out, and there’s no guarantee a judge will decide to protect creators. So, the University of Chicago’s computer science department is creating a tool that visual artists can use to protect their work from being used to train an AI algorithm.

Artists looking for a way to fight back against AI began reaching out to Shan because of his lab’s earlier work on the Fawkes Project in 2020. The project created a digital cloak that people could put on their selfies before they uploaded them to social media, which prevented the images from being used to train a facial recognition algorithm. Artists wondered if the cloak would protect their artwork from being used for training.

Greg Rutkowski is the most famous example of an artist whose work was mimicked by AI image generators like Stable Diffusion or Midjourney. Rutkowski became the most-used prompt for image generators, resulting in thousands of new images that imitated his hyper-detailed fantasy style of artwork flooding the internet. But he’s not the only one affected by the phenomenon.
Comics artist Sarah Andersen was shocked to learn from a fan that her style could be easily replicated with the tools; Karla Ortiz found the same, as did a long list of other artists now listed as collaborators with Glaze.

Current copyright law wasn’t made with AI’s capabilities in mind, Shan says. “Humans can’t process 2 billion images and replicate that work so well. If we had that ability, we’d have different copyright laws,” he says. “The laws have an average human being in mind. So, we’ll see what happens in the current court cases. Does the current law protect artists, or do we need new laws?”

To protect the artists they work with, the Glaze team used the open-source Stable Diffusion model to engineer a new type of cloak that protects the style of artwork. The technique is clever, changing just enough pixels in an image to fool an AI algorithm into interpreting it differently while being practically invisible to the human eye. The AI algorithm will still understand the content of an image but not its style. For example, if Karla Ortiz draws a dog, the ‘Glazed’ image will look to AI as if Vincent van Gogh drew the dog.

“AI sees patterns differently than human beings,” Shan explains. “They see an array of pixels, and that’s true of all AI systems. We try to maximize that gap by adding the smallest changes to our perception but the biggest in the model’s perception.”

In a study, the Glaze team showed that its work was effective at foiling AI and acceptable to artists. It has since released the tool as a downloadable application and as an online tool accessible via a web browser. The application has been downloaded more than 1 million times. Glaze was developed on Stable Diffusion’s model, and other AI image generators use proprietary algorithms that are not available for examination, but Shan says that Glaze translates well to protect against all models.

The team is also trying to anticipate countermeasures.
Even if large AI companies accept artists’ wishes to prevent their work from training their algorithms, it is becoming increasingly trivial for anyone to train AI using a small training set of images. So bad actors may wish to remove Glaze from artists’ work they want to mimic. So far, Glaze is delivering its intended effect.

“For students that went to art school and worked for decades to create their own unique style, they deserve a chance to make money from this,” Shan says. If Glaze can level the playing field even a small amount, it will be a victory for artists.

What Does it Mean for Technology Leaders?

A new chapter in digital sovereignty is being written in the next couple of years as the power dynamics between individuals and organizations, especially those with AI-driven business models, are settled through court decisions and legislation. In the meantime, there is uncertainty about how organizations can maintain control over their intellectual property or whether their reputations will be tarnished if they use AI-created content.

Current market dynamics incentivize platforms to rush to stake their claims to user data. In March, Zoom changed its terms of service in a way that appeared to permit it to harvest user data for AI training but later backtracked after users protested. In July, Google updated its privacy policy to allow the company to collect and analyze information people share for AI training, and it altered its policy to state that data scraped by its web crawler could also be used for AI training unless users opt out.

Those with access to valuable data will want to either put a price on it, as coding help website Stack Overflow did by charging for its API access, or raise prices, as X (formerly known as Twitter) did with its APIs. There may be a price on using data for AI training soon.

Meanwhile, technology leaders at organizations must look at data security from a new perspective.
Not only must they re-evaluate how sensitive their data is in light of AI training, but they should also get ahead of customer requests regarding personal data. Digital sovereignty is a two-way street and must be asserted and respected.
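
The request model the article attributes to DRP (a consumer asking that data not be sold, be made accessible, or be deleted, with a geography flag that starts as a binary between “California” and “voluntary”) can be illustrated with a small Python sketch. This is only an illustration under stated assumptions: every name here (`Regime`, `Right`, `DataRightsRequest`, `to_payload`, the field names, and the string values) is hypothetical and is not taken from the actual DRP schema or API. Treating a California request as mandatory is likewise a reading of Greenwood's remarks, not something the protocol is known to encode.

```python
from dataclasses import dataclass
from enum import Enum


class Regime(Enum):
    # Hypothetical labels for the initial geography binary the article mentions.
    CALIFORNIA = "california"
    VOLUNTARY = "voluntary"


class Right(Enum):
    # The three consumer rights the article lists; the string values are made up.
    OPT_OUT_OF_SALE = "opt_out_of_sale"
    ACCESS = "access"
    DELETION = "deletion"


@dataclass(frozen=True)
class DataRightsRequest:
    consumer_id: str   # hypothetical identifier for the requesting consumer
    regime: Regime     # which legal basis the request is made under
    rights: tuple      # the Right values being exercised


def is_mandatory(request: DataRightsRequest) -> bool:
    # Assumption: requests under California law must be honored,
    # while "voluntary" requests are honored at the company's discretion.
    return request.regime is Regime.CALIFORNIA


def to_payload(request: DataRightsRequest) -> dict:
    # Flatten the request into a plain dict that could be serialized as JSON.
    return {
        "consumer_id": request.consumer_id,
        "regime": request.regime.value,
        "rights": sorted(right.value for right in request.rights),
        "mandatory": is_mandatory(request),
    }


if __name__ == "__main__":
    example = DataRightsRequest(
        consumer_id="consumer-123",
        regime=Regime.CALIFORNIA,
        rights=(Right.DELETION, Right.ACCESS),
    )
    print(to_payload(example))
```

Keeping the regime as an enumeration rather than a free-form string mirrors the article's point that the geography field could gain more values in later versions without changing how requests are built.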

Ethyca Frequently Asked Questions (FAQ)

When was Ethyca founded?

Ethyca was founded in 2018.

Where is Ethyca's headquarters?

Ethyca's headquarters is located at 45 W 21st Street, #403, New York.

What is Ethyca's latest funding round?

Ethyca's latest funding round is Series A - II.

How much did Ethyca raise?

Ethyca raised a total of $25.2M.

Who are the investors of Ethyca?

Investors of Ethyca include IA Ventures, Lachy Groom, TABLE Management, Lee Fixel, BBQ Capital and 15 more.

Who are Ethyca's competitors?

Competitors of Ethyca include Transcend, BigID, blindnet, WireWheel, Osano and 7 more.

What products does Ethyca offer?

Ethyca's products include Fides and 2 more.

Who are Ethyca's customers?

Customers of Ethyca include Away, Casper and Logitech.

Compare Ethyca to Competitors

TrustArc specializes in data privacy management software and solutions within the privacy sector. The company offers a suite of products designed to automate privacy compliance, manage consumer rights, and ensure data governance for organizations. TrustArc's solutions cater to businesses seeking to navigate and comply with various global privacy regulations and frameworks. TrustArc was formerly known as TRUSTe. It was founded in 1997 and is based in Walnut Creek, California.

Transcend focuses on providing data privacy infrastructure and data governance solutions within the technology sector. Its main offerings include a unified platform for privacy and data governance that simplifies compliance, automates data subject requests, and provides AI governance tools to manage risks associated with large language model deployments. It was founded in 2017 and is based in San Francisco, California.

Osano is a data privacy management platform that specializes in simplifying compliance across various industries. The company offers a suite of tools designed to automate and streamline consent management, data subject rights processing, data mapping, and vendor risk assessments. Osano primarily serves businesses looking to manage their data privacy obligations effectively. It was founded in 2018 and is based in Austin, Texas.

Immuta focuses on data security, operating within the technology and software industry. The company offers a data security platform that provides services such as sensitive data discovery and classification, data access control, and continuous activity monitoring for risk detection. Immuta primarily serves sectors such as financial services, healthcare, the public sector, technology, and the software industry. It was founded in 2015 and is based in Boston, Massachusetts.

BigID specializes in data security, privacy, compliance, and governance within the technology sector. The company offers a unified platform for data visibility and control, providing services such as data discovery and classification, data security posture management, and data privacy management. BigID's solutions cater to various industries including financial services, healthcare, and retail. It was founded in 2016 and is based in New York, New York.

InCountry provides data residency-as-a-service and cross-border data transfers. It develops software development kits (SDKs) in a range of popular programming languages so that companies can access their customer data to build new applications and services. It serves sectors such as automotive, energy, financial services, and much more. The company was founded in 2019 and is based in Wilmington, Delaware.
