Weights & Biases

Founded Year

2017Stage

Series C - II | AliveTotal Raised

$250MValuation

$0000Last Raised

$50M | 1 yr agoMosaic Score The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

-87 points in the past 30 days

About Weights & Biases

Weights & Biases operates as a developer-first Machine learning operations (MLOps) platform operating in the machine learning sector. The company offers a suite of tools designed to optimize, visualize, collaborate on, and standardize machine learning models and data pipelines, ensuring reproducibility through tracking of hyperparameters, code, model weights, and dataset versions. Weights & Biases primarily serves the machine learning community across various industries. It was founded in 2017 and is based in San Francisco, California.

Loading...

ESPs containing Weights & Biases

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The prompt engineering market focuses on designing and generating specific prompts for machine learning models. By carefully crafting the input given to a language model, prompt engineering aims to guide the model toward generating accurate outputs that align with the user's intentions. This process involves understanding the strengths and weaknesses of the model and the task at hand. Ultimately, …

Weights & Biases named as Leader among 4 other companies, including Comet, Vellum, and Orq.

Loading...

Research containing Weights & Biases

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Weights & Biases in 5 CB Insights research briefs, most recently on Oct 3, 2023.

Sep 29, 2023

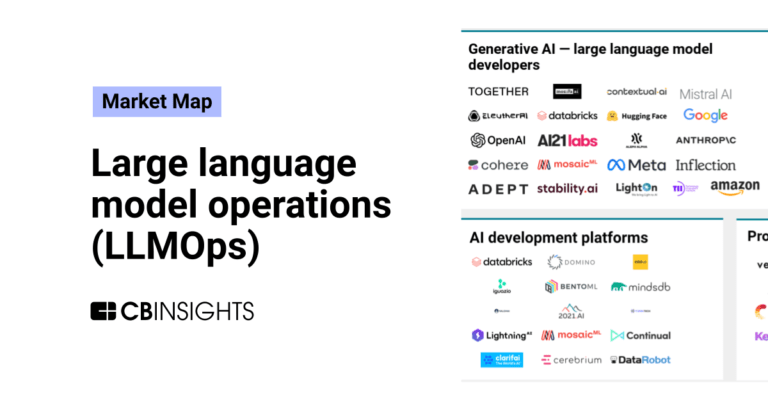

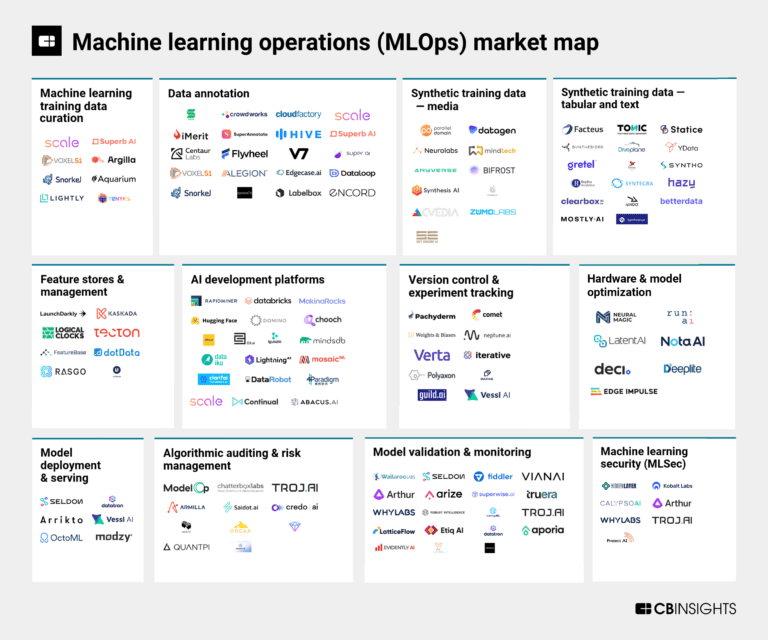

The machine learning operations (MLOps) market map

Aug 31, 2023

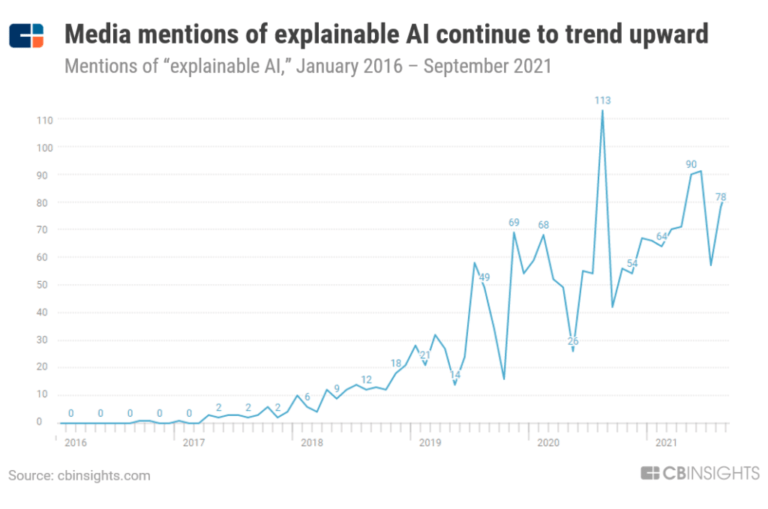

The responsible AI market map

Apr 11, 2022

The top 100 AI startups of 2021: Where are they now?Expert Collections containing Weights & Biases

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Weights & Biases is included in 4 Expert Collections, including Unicorns- Billion Dollar Startups.

Unicorns- Billion Dollar Startups

1,244 items

Artificial Intelligence

14,767 items

Companies developing artificial intelligence solutions, including cross-industry applications, industry-specific products, and AI infrastructure solutions.

AI 100

200 items

Winners of CB Insights' 5th annual AI 100, a list of the 100 most promising private AI companies in the world.

AI 100 (2024)

100 items

Latest Weights & Biases News

Sep 19, 2024

| Amazon Web Services Published: This post is co-written with Meta’s PyTorch team. In today’s rapidly evolving AI landscape, businesses are constantly seeking ways to use advanced large language models (LLMs) for their specific needs. Although foundation models (FMs) offer impressive out-of-the-box capabilities, true competitive advantage often lies in deep model customization through fine-tuning. However, fine-tuning LLMs for complex tasks typically requires advanced AI expertise to align and optimize them effectively. Recognizing this challenge, Meta developed torchtune , a PyTorch-native library that simplifies authoring, fine-tuning, and experimenting with LLMs, making it more accessible to a broader range of users and applications. In this post, AWS collaborates with Meta’s PyTorch team to showcase how you can use Meta’s torchtune library to fine-tune Meta Llama-like architectures while using a fully-managed environment provided by Amazon SageMaker Training . We demonstrate this through a step-by-step implementation of model fine-tuning, inference, quantization, and evaluation. We perform the steps on a Meta Llama 3.1 8B model utilizing the LoRA fine-tuning strategy on a single p4d.24xlarge worker node (providing 8 Nvidia A100 GPUs). Before we dive into the step-by-step guide, we first explored the performance of our technical stack by fine-tuning a Meta Llama 3.1 8B model across various configurations and instance types. As can be seen in the following chart, we found that a single p4d.24xlarge delivers 70% higher performance than two g5.48xlarge instances (each with 8 NVIDIA A10 GPUs) at almost 47% reduced price. We therefore have optimized the example in this post for a p4d.24xlarge configuration. However, you could use the same code to run single-node or multi-node training on different instance configurations by changing the parameters passed to the SageMaker estimator . You could further optimize the time for training in the following graph by using a SageMaker managed warm pool and accessing pre-downloaded models using Amazon Elastic File System (Amazon EFS). Challenges with fine-tuning LLMs Generative AI models offer many promising business use cases. However, to maintain factual accuracy and relevance of these LLMs to specific business domains, fine-tuning is required. Due to the growing number of model parameters and the increasing context length of modern LLMs, this process is memory intensive. To address these challenges, fine-tuning strategies like LoRA (Low-Rank Adaptation) and QLoRA (Quantized Low-Rank Adaptation) limit the number of trainable parameters by adding low-rank parallel structures to the transformer layers. This enables you to train LLMs even on systems with low memory availability like commodity GPUs. However, this leads to an increased complexity because new dependencies have to be handled and training recipes and hyperparameters need to be adapted to the new techniques. What businesses need today is user-friendly training recipes for these popular fine-tuning techniques, which provide abstractions to the end-to-end tuning process, addressing the common pitfalls in the most opinionated way. How does torchtune helps? torchtune is a PyTorch-native library that aims to democratize and streamline the fine-tuning process for LLMs. By doing so, it makes it straightforward for researchers, developers, and organizations to adapt these powerful LLMs to their specific needs and constraints. It provides training recipes for a variety of fine-tuning techniques, which can be configured through YAML files. The recipes implement common fine-tuning methods (full-weight, LoRA, QLoRA) as well as other common tasks like inference and evaluation. They automatically apply a set of important features ( FSDP , activation checkpointing, gradient accumulation, mixed precision) and are specific to a given model family (such as Meta Llama 3/3.1 or Mistral) as well as compute environment (single-node vs. multi-node). Additionally, torchtune integrates with major libraries and frameworks like Hugging Face datasets , EleutherAI’s Eval Harness , and Weights & Biases. This helps address the requirements of the generative AI fine-tuning lifecycle, from data ingestion and multi-node fine-tuning to inference and evaluation. The following diagram shows a visualization of the steps we describe in this post. Refer to the installation instructions and PyTorch documentation to learn more about torchtune and its concepts. Solution overview This post demonstrates the use of SageMaker Training for running torchtune recipes through task-specific training jobs on separate compute clusters. SageMaker Training is a comprehensive, fully managed ML service that enables scalable model training. It provides flexible compute resource selection, support for custom libraries, a pay-as-you-go pricing model, and self-healing capabilities. By managing workload orchestration, health checks, and infrastructure, SageMaker helps reduce training time and total cost of ownership. The solution architecture incorporates the following key components to enhance security and efficiency in fine-tuning workflows: Security enhancement – Training jobs are run within private subnets of your virtual private cloud (VPC), significantly improving the security posture of machine learning (ML) workflows. Efficient storage solution – Amazon EFS is used to accelerate model storage and access across various phases of the ML workflow. Customizable environment – We use custom containers in training jobs. The support in SageMaker for custom containers allows you to package all necessary dependencies, specialized frameworks, and libraries into a single artifact, providing full control over your ML environment. The following diagram illustrates the solution architecture. Users initiate the process by calling the SageMaker control plane through APIs or command line interface (CLI) or using the SageMaker SDK for each individual step. In response, SageMaker spins up training jobs with the requested number and type of compute instances to run specific tasks. Each step defined in the diagram accesses torchtune recipes from an Amazon Simple Storage Service (Amazon S3) bucket and uses Amazon EFS to save and access model artifacts across different stages of the workflow. By decoupling every torchtune step, we achieve a balance between flexibility and integration, allowing for both independent execution of steps and the potential for automating this process using seamless pipeline integration. In this use case, we fine-tune a Meta Llama 3.1 8B model with LoRA . Subsequently, we run model inference, and optionally quantize and evaluate the model using torchtune and SageMaker Training. Recipes, configs, datasets, and prompt templates are completely configurable and allow you to align torchtune to your requirements. To demonstrate this, we use a custom prompt template in this use case and combine it with the open source dataset Samsung/samsum from the Hugging Face hub. We fine-tune the model using torchtune’s multi device LoRA recipe (lora_finetune_distributed) and use the SageMaker customized version of Meta Llama 3.1 8B default config (llama3_1/8B_lora). Prerequisites You need to complete the following prerequisites before you can run the SageMaker Jupyter notebooks: Create a Hugging Face access token to get access to the gated repo meta-llama/Meta-Llama-3.1-8B on Hugging Face. Create a Weights & Biases API key to access the Weights & Biases dashboard for logging and monitoring Request a SageMaker service quota for 1x ml.p4d.24xlarge and 1xml.g5.2xlarge. Create an AWS Identity and Access Management (IAM) role with managed policies AmazonSageMakerFullAccess, AmazonEC2FullAccess, AmazonElasticFileSystemFullAccess, and AWSCloudFormationFullAccess to give required access to SageMaker to run the examples. (This is for demonstration purposes. You should adjust this to your specific security requirements for production.) Create an Amazon SageMaker Studio domain (see Quick setup to Amazon SageMaker ) to access Jupyter notebooks with the preceding role. Refer to the instructions to set permissions for Docker build. Log in to the notebook console and clone the GitHub repo: $ git clone https://github.com/aws-samples/sagemaker-distributed-training-workshop.git$ cd sagemaker-distributed-training-workshop/13-torchtune Run the notebook ipynb to set up VPC and Amazon EFS using an AWS CloudFormation stack. Review torchtune configs The following figure illustrates the steps in our workflow. You can look up the torchtune configs for your use case by directly using the tune CLI .For this post, we provide modified config files aligned with SageMaker directory path’s structure: sh-4.2$ cd config/sh-4.2$ ls -ltr-rw-rw-r-- 1 ec2-user ec2-user 1151 Aug 26 18:34 config_l3.1_8b_gen_orig.yaml-rw-rw-r-- 1 ec2-user ec2-user 1172 Aug 26 18:34 config_l3.1_8b_gen_trained.yaml-rw-rw-r-- 1 ec2-user ec2-user 644 Aug 26 18:49 config_l3.1_8b_quant.yaml-rw-rw-r-- 1 ec2-user ec2-user 2223 Aug 28 14:53 config_l3.1_8b_lora.yaml-rw-rw-r-- 1 ec2-user ec2-user 1223 Sep 4 14:28 config_l3.1_8b_eval_trained.yaml-rw-rw-r-- 1 ec2-user ec2-user 1213 Sep 4 14:29 config_l3.1_8b_eval_original.yaml torchtune uses these config files to select and configure the components (think models and tokenizers) during the execution of the recipes. Build the container As part of our example, we create a custom container to provide custom libraries like torch nightlies and torchtune. Complete the following steps: sh-4.2$ cat Dockerfile# Set the default value for the REGION build argumentARG REGION=us-west-2# SageMaker PyTorch image for TRAININGFROM ${ACCOUNTID}.dkr.ecr.${REGION}.amazonaws.com/pytorch-training:2.3.0-gpu-py311-cu121-ubuntu20.04-sagemaker# Uninstall existing PyTorch packagesRUN pip uninstall torch torchvision transformer-engine -y# Install latest release of PyTorch and torchvisionRUN pip install --force-reinstall torch==2.4.1 torchao==0.4.0 torchvision==0.19.1 Run the 1_build_container.ipynb notebook until the following command to push this file to your ECR repository: !sm-docker build . --repository accelerate:latest sm-docker is a CLI tool designed for building Docker images in SageMaker Studio using AWS CodeBuild . We install the library as part of the notebook. Next, we will run the 2_torchtune-llama3_1.ipynb notebook for all fine-tuning workflow tasks. For every task, we review three artifacts: torchtune configuration file SageMaker task output Run the fine-tuning job The following code shows a shortened torchtune recipe configuration highlighting a few key components of the file for a fine-tuning job: Model component including LoRA rank configuration Meta Llama 3 tokenizer to tokenize the data Checkpointer to read and write checkpoints Dataset component to load the dataset sh-4.2$ cat config_l3.1_8b_lora.yaml# Model Argumentsmodel: _component_: torchtune.models.llama3_1.lora_llama3_1_8b lora_attn_modules: ['q_proj', 'v_proj'] lora_rank: 8 lora_alpha: 16# Tokenizertokenizer: _component_: torchtune.models.llama3.llama3_tokenizer path: /opt/ml/input/data/model/hf-model/original/tokenizer.modelcheckpointer: _component_: torchtune.utils.FullModelMetaCheckpointer checkpoint_files: [ consolidated.00.pth ] …# Dataset and Samplerdataset: _component_: torchtune.datasets.samsum_dataset train_on_input: Truebatch_size: 13# Trainingepochs: 1gradient_accumulation_steps: 2... and more ... We use Weights & Biases for logging and monitoring our training jobs, which helps us track our model’s performance: metric_logger:_component_: torchtune.utils.metric_logging.WandBLogger… Next, we define a SageMaker task that will be passed to our utility function in the script create_pytorch_estimator. This script creates the PyTorch estimator with all the defined parameters. In the task, we use the lora_finetune_distributed torchrun recipe with config config-l3.1-8b-lora.yaml on an ml.p4d.24xlarge instance. Make sure you download the base model from Hugging Face before it’s fine-tuned using the use_downloaded_model parameter. The image_uri parameter defines the URI of the custom container. sagemaker_tasks={ "fine-tune":{ "hyperparameters":{ "tune_config_name":"config-l3.1-8b-lora.yaml", "tune_action":"fine-tune", "use_downloaded_model":"false", "tune_recipe":"lora_finetune_distributed" }, "instance_count":1, "instance_type":"ml.p4d.24xlarge", "image_uri":"<accountid>.dkr.ecr.<region>.amazonaws.com/accelerate:latest" } ... and more ...} To create and run the task, run the following code: Task="fine-tune"estimator=create_pytorch_estimator(**sagemaker_tasks[Task])execute_task(estimator) The following code shows the task output and reported status: # Refer-Output2024-08-16 17:45:32 Starting - Starting the training job.........1|140|Loss: 1.4883038997650146: 99%|█████████▉| 141/142 [06:26<00:02, 2.47s/it]1|141|Loss: 1.4621509313583374: 99%|█████████▉| 141/142 [06:26<00:02, 2.47s/it]Training completed with code: 02024-08-26 14:19:09,760 sagemaker-training-toolkit INFO Reporting training SUCCESS The final model is saved to Amazon EFS, which makes it available without download time penalties. Monitor the fine-tuning job You can monitor various metrics such as loss and learning rate for your training run through the Weights & Biases dashboard. The following figures show the results of the training run where we tracked GPU utilization, GPU memory utilization, and loss curve. For the following graph, to optimize memory usage, torchtune uses only rank 0 to initially load the model into CPU memory. rank 0 therefore will be responsible for loading the model weights from the checkpoint. The example is optimized to use GPU memory to its maximum capacity. Increasing the batch size further will lead to CUDA out-of-memory (OOM) errors. The run took about 13 minutes to complete for one epoch, resulting in the loss curve shown in the following graph. Run the model generation task In the next step, we use the previously fine-tuned model weights to generate the answer to a sample prompt and compare it to the base model. The following code shows the configuration of the generate recipe config_l3.1_8b_gen_trained.yaml. The following are key parameters: FullModelMetaCheckpointer – We use this to load the trained model checkpoint meta_model_0.pt from Amazon EFS CustomTemplate.SummarizeTemplate – We use this to format the prompt for inference # torchtune - trained model generation config - config_l3.1_8b_gen_trained.yamlmodel: _component_: torchtune.models.llama3_1.llama3_1_8bcheckpointer: _component_: torchtune.utils.FullModelMetaCheckpointer checkpoint_dir: /opt/ml/input/data/model/ checkpoint_files: [ meta_model_0.pt ] …# Generation arguments; defaults taken from gpt-fastinstruct_template: CustomTemplate.SummarizeTemplate... and more ... Next, we configure the SageMaker task to run on a single ml.g5.2xlarge instance: prompt=r'{"dialogue":"Amanda: I baked cookies. Do you want some?rnJerry: Sure rnAmanda: I will bring you tomorrow :-)"}'sagemaker_tasks={ "generate_inference_on_trained":{ "hyperparameters":{ "tune_config_name":"config_l3.1_8b_gen_trained.yaml ", "tune_action":"generate-trained", "use_downloaded_model":"true", "prompt":json.dumps(prompt) }, "instance_count":1, "instance_type":"ml.g5.2xlarge", "image_uri":"<accountid>.dkr.ecr.<region>.amazonaws.com/accelerate:latest" }} In the output of the SageMaker task, we see the model summary output and some stats like tokens per second: #Refer- Output...Amanda: I baked cookies. Do you want some?rnJerry: Sure rnAmanda: I will bring you tomorrow :-)Summary:Amanda baked cookies. She will bring some to Jerry tomorrow.INFO:torchtune.utils.logging:Time for inference: 1.71 sec total, 7.61 tokens/secINFO:torchtune.utils.logging:Memory used: 18.32 GB... and more ... We can generate inference from the original model using the original model artifact consolidated.00.pth: # torchtune - trained original generation config - config_l3.1_8b_gen_orig.yaml…checkpointer: _component_: torchtune.utils.FullModelMetaCheckpointer checkpoint_dir: /opt/ml/input/data/model/hf-model/original/ checkpoint_files: [ consolidated.00.pth ]... and more ... The following code shows the comparison output from the base model run with the SageMaker task (generate_inference_on_original). We can see that the fine-tuned model is performing subjectively better than the base model by also mentioning that Amanda baked the cookies. # Refer-Output---Summary:Jerry tells Amanda he wants some cookies. Amanda says she will bring him some cookies tomorrow.... and more ... Run the model quantization task To speed up the inference and decrease the model artifact size, we can apply post-training quantization. torchtune relies on torchao for post-training quantization . We configure the recipe to use Int8DynActInt4WeightQuantizer, which refers to int8 dynamic per token activation quantization combined with int4 grouped per axis weight quantization. For more details, refer to the torchao implementation . # torchtune model quantization config - config_l3.1_8b_quant.yamlmodel: _component_: torchtune.models.llama3_1.llama3_1_8bcheckpointer: _component_: torchtune.utils.FullModelMetaCheckpointer …quantizer: _component_: torchtune.utils.quantization.Int8DynActInt4WeightQuantizer groupsize: 256 We again use a single ml.g5.2xlarge instance and use SageMaker warm pool configuration to speed up the spin-up time for the compute nodes: sagemaker_tasks={"quantize_trained_model":{ "hyperparameters":{ "tune_config_name":"config_l3.1_8b_quant.yaml", "tune_action":"run-quant", "use_downloaded_model":"true" }, "instance_count":1, "instance_type":"ml.g5.2xlarge", "image_uri":"<accountid>.dkr.ecr.<region>.amazonaws.com/accelerate:latest" }} In the output, we see the location of the quantized model and how much memory we saved due to the process: #Refer-Output...linear: layers.31.mlp.w1, in=4096, out=14336linear: layers.31.mlp.w2, in=14336, out=4096linear: layers.31.mlp.w3, in=4096, out=14336linear: output, in=4096, out=128256INFO:torchtune.utils.logging:Time for quantization: 7.40 secINFO:torchtune.utils.logging:Memory used: 22.97 GBINFO:torchtune.utils.logging:Model checkpoint of size 8.79 GB saved to /opt/ml/input/data/model/quantized/meta_model_0-8da4w.pt... and more ... You can run model inference on the quantized model meta_model_0-8da4w.pt by updating the inference-specific configurations . Run the model evaluation task Finally, let’s evaluate our fine-tuned model in an objective manner by running an evaluation on the validation portion of our dataset. For our evaluation, we use a custom task for the evaluation harness to evaluate the dialogue summarizations using the rouge metrics. The recipe configuration points the evaluation harness to our custom evaluation task: # torchtune trained model evaluation config - config_l3.1_8b_eval_trained.yamlmodel:...include_path: "/opt/ml/input/data/config/tasks"tasks: ["samsum"]... The following code is the SageMaker task that we run on a single ml.p4d.24xlarge instance: sagemaker_tasks={"evaluate_trained_model":{ "hyperparameters":{ "tune_config_name":"config_l3.1_8b_eval_trained.yaml", "tune_action":"run-eval", "use_downloaded_model":"true", }, "instance_count":1, "instance_type":"ml.p4d.24xlarge", }} Run the model evaluation on ml.p4d.24xlarge: Task="evaluate_trained_model"estimator=create_pytorch_estimator(**sagemaker_tasks[Task])execute_task(estimator) The following tables show the task output for the fine-tuned model as well as the base model. The following output is for the fine-tuned model. Tasks Conclusion In this post, we discussed how you can fine-tune Meta Llama-like architectures using various fine-tuning strategies on your preferred compute and libraries, using custom dataset prompt templates with torchtune and SageMaker. This architecture gives you a flexible way of running fine-tuning jobs that are optimized for GPU memory and performance. We demonstrated this through fine-tuning a Meta Llama3.1 model using P4 and G5 instances on SageMaker and used observability tools like Weights & Biases to monitor loss curve, as well as CPU and GPU utilization. We encourage you to use SageMaker training capabilities and Meta’s torchtune library to fine-tune Meta Llama-like architectures for your specific business use cases. To stay informed about upcoming releases and new features, refer to the torchtune GitHub repo and the official Amazon SageMaker training documentation . Special thanks to Kartikay Khandelwal (Software Engineer at Meta), Eli Uriegas (Engineering Manager at Meta), Raj Devnath (Sr. Product Manager Technical at AWS) and Arun Kumar Lokanatha (Sr. ML Solution Architect at AWS) for their support to the launch of this post. About the Authors Kanwaljit Khurmi is a Principal Solutions Architect at Amazon Web Services. He works with AWS customers to provide guidance and technical assistance, helping them improve the value of their solutions when using AWS. Kanwaljit specializes in helping customers with containerized and machine learning applications. Roy Allela is a Senior AI/ML Specialist Solutions Architect at AWS.He helps AWS customers—from small startups to large enterprises—train and deploy large language models efficiently on AWS. Matthias Reso is a Partner Engineer at PyTorch working on open source, high-performance model optimization, distributed training ( FSDP ), and inference. He is a co-maintainer of llama-recipes and TorchServe . Trevor Harvey is a Principal Specialist in Generative AI at Amazon Web Services (AWS) and an AWS Certified Solutions Architect – Professional. He serves as a voting member of the PyTorch Foundation Governing Board, where he contributes to the strategic advancement of open-source deep learning frameworks. At AWS, Trevor works with customers to design and implement machine learning solutions and leads go-to-market strategies for generative AI services.

Weights & Biases Frequently Asked Questions (FAQ)

When was Weights & Biases founded?

Weights & Biases was founded in 2017.

Where is Weights & Biases's headquarters?

Weights & Biases's headquarters is located at 400 Alabama Street, San Francisco.

What is Weights & Biases's latest funding round?

Weights & Biases's latest funding round is Series C - II.

How much did Weights & Biases raise?

Weights & Biases raised a total of $250M.

Who are the investors of Weights & Biases?

Investors of Weights & Biases include Coatue, Bloomberg Beta, Insight Partners, Nat Friedman, Bond and 12 more.

Who are Weights & Biases's competitors?

Competitors of Weights & Biases include Fiddler AI, 2021.AI, Verta, Etiq AI, deepset and 7 more.

Loading...

Compare Weights & Biases to Competitors

Comet is a company that focuses on machine learning and operates in the technology sector. The company offers a platform that integrates with existing infrastructure and tools to manage, visualize, and optimize machine learning models. This includes tracking code, hyperparameters, metrics, and more for training runs, as well as monitoring the performance of models in production. It was founded in 2017 and is based in New York, New York.

Neptune Labs is a company that specializes in machine learning operations (MLOps), operating within the technology and artificial intelligence industry. The company provides a platform that allows users to log, store, organize, compare, and share machine learning model metadata. This includes features such as experiment tracking, model versioning, and collaboration tools, all designed to streamline and enhance machine learning workflows. It was founded in 2017 and is based in Palo Alto, California.

Seldon specializes in machine learning operations (MLOps) solutions, focusing on the deployment and management of machine learning models for enterprise companies. The company offers a software framework that enables businesses to deploy, monitor, and manage machine learning models. Seldon's products cater to a variety of industries that require robust machine learning operations, including financial services, automotive, and insurance sectors. It was founded in 2014 and is based in Shoreditch, United Kingdom.

Apres was a company focused on making artificial intelligence safe and accessible through explainability within the AI sector. They offered a product named Engaged AI, which served as an operating system for AI-driven organizations, aimed at accelerating AI improvement by uncovering hidden data insights, providing detailed explanations for AI model decisions, and ensuring transparency in AI systems. The company primarily catered to large banks, insurance firms, and tech companies seeking AI solutions for issues like fraud detection, customer retention, bias elimination, compliance, and more. It was founded in 2018 and is based in San Francisco, California.

Modzy is a company that focuses on providing a production platform for machine learning, operating within the technology and artificial intelligence industry. The company offers services that allow for the deployment, connection, and operation of machine learning models in various environments, with a focus on enterprise and edge computing. These services are primarily targeted towards sectors such as manufacturing, telecom, energy and utilities, and the public sector. Modzy was formerly known as Yzdom, Inc.. It was founded in 2019 and is based in Vienna, Virginia.

Datatron is an enterprise AI platform focused on streamlining machine learning operations and governance workflows. The company offers a flexible MLOps platform that enables businesses to deploy, manage, and monitor AI models efficiently, with a strong emphasis on enterprise scalability and security. Datatron's solutions cater to various stakeholders including business executives, data scientists, and engineering/DevOps teams, providing tools for AI model monitoring, governance, and operational management without the need for manual scripting or ad hoc processes. It was founded in 2016 and is based in San Francisco, California.

Loading...