Automation Anywhere

Founded Year

2003

Stage

Loan | Alive

Total Raised

$1.049B

Last Raised

$200M | 2 yrs ago

Mosaic Score

The Mosaic Score is an algorithm that measures the overall financial health and market potential of private companies.

-62 points in the past 30 days

About Automation Anywhere

Automation Anywhere specializes in intelligent automation solutions. It focuses on artificial intelligence (AI) driven organizational processes. The company provides a platform encompassing process discovery, robotic process automation (RPA), process orchestration, document processing, and analytics with security and governance. The platform is designed to enhance productivity, foster innovation, and improve customer service for businesses globally. Automation Anywhere was formerly known as Tethys Solutions. It was founded in 2003 and is based in San Jose, California.


ESPs containing Automation Anywhere

The ESP matrix leverages data and analyst insight to identify and rank leading companies in a given technology landscape.

The intelligent document processing (IDP) market is focused on automating the processing of unstructured data, such as documents, emails, images and videos. This is achieved through the use of AI and machine learning technologies that can extract relevant information from these sources and integrate it into workflows. The IDP market is growing rapidly as businesses seek to reduce manual processing…

Automation Anywhere is named a Leader among 15 other companies, including Oracle, NEC, and Iron Mountain.


Research containing Automation Anywhere

Get data-driven expert analysis from the CB Insights Intelligence Unit.

CB Insights Intelligence Analysts have mentioned Automation Anywhere in 8 CB Insights research briefs, most recently on Dec 14, 2022.

Expert Collections containing Automation Anywhere

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Automation Anywhere is included in 8 Expert Collections, including Unicorns- Billion Dollar Startups.

Unicorns- Billion Dollar Startups

1,244 items

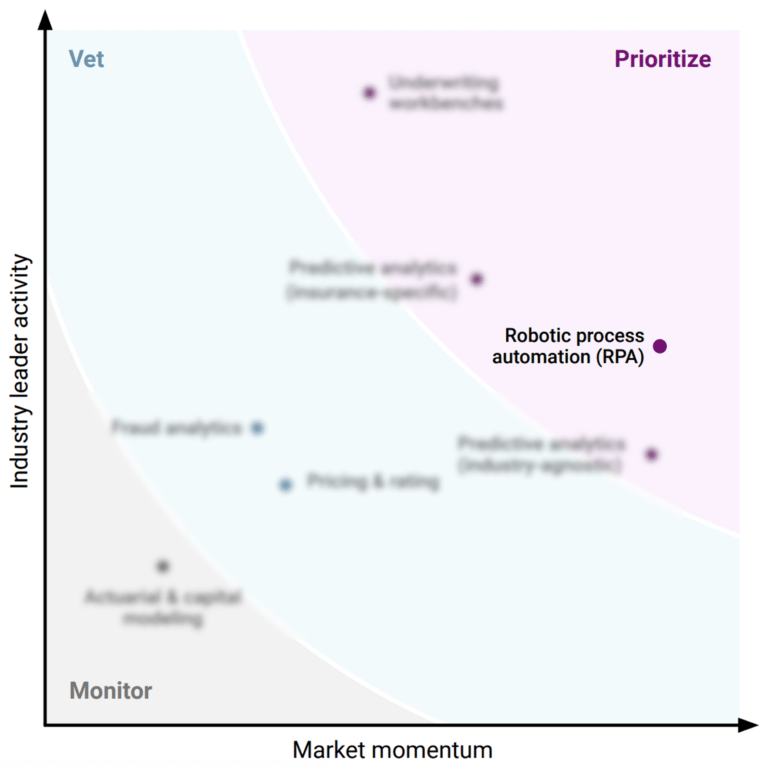

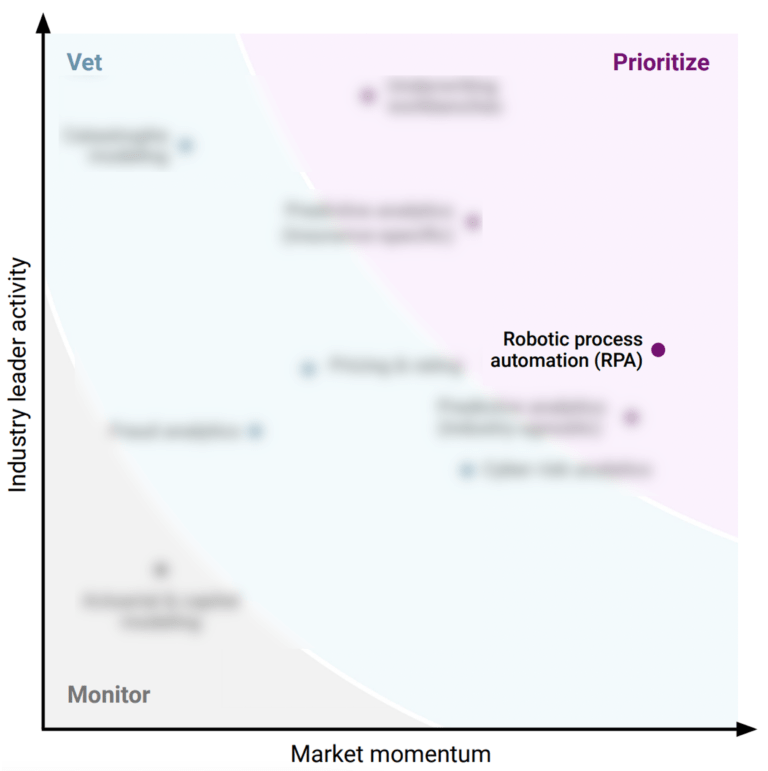

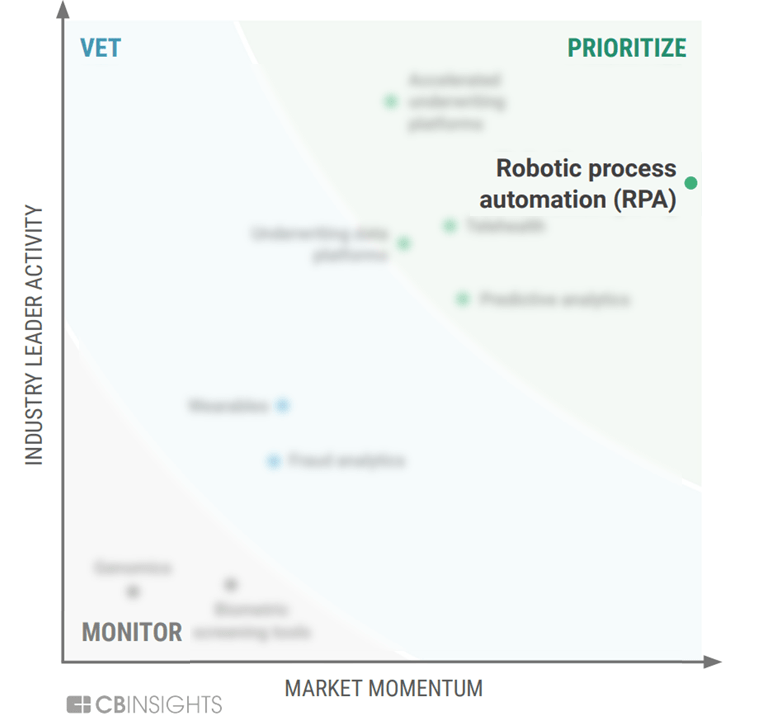

Robotic Process Automation

322 items

RPA refers to the software-enabled automation of data-intensive tasks that are low-skill but highly sensitive operationally, including data entry, transaction processing, and compliance.

Tech IPO Pipeline

568 items

AI 100

100 items

Artificial Intelligence

14,769 items

Companies developing artificial intelligence solutions, including cross-industry applications, industry-specific products, and AI infrastructure solutions.

Conference Exhibitors

5,302 items

Automation Anywhere Patents

Automation Anywhere has filed 77 patents.

The 3 most popular patent topics include:

- information technology management

- video game development

- ibm mainframe operating systems

| Application Date | Grant Date | Title | Related Topics | Status |
|---|---|---|---|---|
| 10/31/2019 | 6/25/2024 | | Enterprise application integration, Office suites, Web applications, Information technology management, Data management | Grant |

Latest Automation Anywhere News

Sep 17, 2024

Womble Bond Dickinson’s content series, Innovation Interchange: The Power of Cross-Industry Insight, explores emerging challenges from the viewpoint of trendsetting industries. As part of this series, WBD Partner Jay Silver moderated a panel discussion on AI governance with Unilever Chief Privacy Officer Christine J. Lee, Datadog Privacy Counsel Erin Park, Automation Anywhere Director of Legal Product & Privacy Counsel Shannon Salerno, and WBD Partner Taylor Ey.

In an era where technology is rapidly reshaping industries, AI stands at the forefront, promising unparalleled efficiency and innovation. But as AI tools become integral to business operations, the need for robust AI governance becomes crucial. Tech executives and in-house counsel are now faced with the challenge of building and implementing frameworks that ensure responsible AI use while meeting regulatory and contractual obligations.

The rise of generative AI amplifies these challenges, introducing new risks that extend beyond traditional boundaries. How do companies evaluate these risks, and what frameworks are necessary to mitigate them? And how do companies develop AI governance programs that not only identify and address these risks but also integrate seamlessly with existing systems? The answers to these questions will determine which businesses stay competitive and responsible in the digital age.

Building AI Governance Guardrails

The rise of generative AI in the workplace has greatly increased the need for companies to develop frameworks for responsible AI use.
Generative AI expands the possible risk to a greater range of people, which means companies should introduce new policies and procedures to cover those new AI users. Salerno said, “You have to frequently reevaluate your framework as new technologies, such as generative AI, come out. One of the questions you have to ask is, ‘What risk does the new technology introduce?’”

Park said an AI governance program needs to satisfy three requirements:

1. It must accurately identify and mitigate risk;
2. It must help the company meet its regulatory requirements; and
3. It must satisfy the operation’s contractual obligations.

“The framework we implemented allows us to responsibly integrate AI tools into our processes and for our employees to leverage AI to enhance productivity,” she said. Park also noted it is important to get feedback from internal users so the company can continuously evolve its AI governance framework.

AI in the Workplace Presents Challenges

Lee noted that issues such as bias, effectiveness, and privacy are common risks for companies in employing AI. “There’s also the governance or legal risks. That’s where we use the framework we’ve built to have the right stakeholders asking the right questions at the right time,” she said, adding that one of the most challenging pieces is to monitor tools as they interact with consumers.

Park said companies need to ask three general questions related to risk mitigation:

- How are users using AI tools?
- Are these tools used to develop company products and if so, what does this mean for security, IP, and other issues that may have to be addressed in new contract terms?
- Do we have all the appropriate stakeholders in the company involved in assessing the risks?

“On the privacy side, this is not new,” Ey said. For example, GDPR (the EU’s privacy law that took effect over 6 years ago in 2018) addresses use of automated decision-making tools, which may apply when using generative AI tools to process employee or applicant data. A number of U.S. states also have addressed AI-related privacy concerns. “Data privacy lawyers have been looking at technology that processes personal data; this is on our radar.”

Salerno said an AI governance program should address a number of key privacy-related issues. “You must have a limited purpose for processing data, and AI may not need that data. If AI doesn’t need the data for a processing purpose, it shouldn’t have access to that data,” she said. Also, companies need to look at data transfer clauses. Data may not be able to leave a specific country; such data location restrictions could create issues if data is processed outside of a permitted country through the use of AI.

Salerno said companies should ask, “Is AI going to learn from this data? If so, can the data be removed if need be (such as in the event of a data subject request)?” She said it may be better to not enter secure or private data into an AI system in the first place. In addition, AI users should make sure their systems are secure from cyberattacks that could put private information at risk.

Implementing an AI Governance Framework

Once risks are identified and an AI governance program designed, it must be implemented in a real-world setting.
Park said, “The first step in building an AI governance framework is having a risk assessment standard taking into consideration industry standards, such as the NIST AI Risk Management Framework and the OECD AI Risk Evaluation Framework.” Such risk assessment standards should be tailored to the company’s specific needs and risk profile. The second step is to integrate the risk assessment standard into existing workflows and processes. During this step, Park said it is important to socialize the terms of the AI governance framework. Employees need to learn to identify risks and should have an avenue to provide feedback. “The feedback loop and recalibration of risk is important,” she said. But having such a loop requires continuous communication between stakeholders.

For example: A vendor offers an AI component to an existing tool and a team member wants to use it. “Users may want to click the button and start using it because it’s so easy,” Park said. It is important to make sure employees know to go through proper procedures before using new AI. As the AI governance team reviews new AI tools, they should pay close attention to terms of service provided by vendors, as the terms may not reflect the risks brought on by using new AI features.

Lee said transparency and appropriate human oversight are important in AI governance, particularly for consumer-facing organizations. “We’re building out our governance and we have a process for assessing AI,” she said. “We created an agile Governance Committee and what we’ve found is we had to separate governance from deployment.”

AI & Third-Party Vendors

Ey noted that vendor risk management is an issue of particular concern, and that the details matter in selecting and implementing third-party AI tools. Companies should also review vendor contracts when they evaluate third-party AI tools. “For example, is the vendor taking some responsibility for IP infringement? Is there a carve-out so huge you can drive a truck through it? Is there room for negotiation, or are the terms just click-through?” she asked. Ey added that if the company is helping the vendor develop an AI tool, it gives them more room to negotiate on the terms of its use.

Salerno said another question to ask of vendors is, “What type of training data is being used to train the AI?” For example, companies may want to avoid using AI that was trained on open source code to assist with writing product code without other protections in place (such as a process to identify open source code), as such open source code could make its way into your code and have intellectual property implications. She also said companies need to examine any AI vendor’s data import/export clauses to make sure sensitive data or restricted technology isn’t sent to prohibited countries.

Internally, companies should take steps to: (i) limit the types of prompts that can be put into a system to reduce the likelihood sensitive data is sent to AI; (ii) review disclosures in the vendor’s user interface regarding data that will be sent to AI, which put users on notice that AI will process data and enable users to follow internal AI guidelines; and (iii) review disclosures in the vendor’s user interface/output informing users that content/conversations are AI generated in order to comply with any AI content labeling requirements, alert users they are chatting with bots, and assist with data mapping. Finally, Salerno said a cross-collaborative team should test AI systems before they are deployed company-wide.

Lee said her business has broad experience with hundreds of AI tools and an existing AI assurance process. “What is good about AI is also what is challenging. Having this process in place put us in a great position but the challenge now is how do you make it ‘Business as usual’ without limiting what the business needs to do?” Tools and use cases are always evolving, as are the terms and conditions governing them.
“You have to adapt your guardrails around how you are going to employ that AI tool,” Lee said. Lastly, she said companies need to be aware of financial limitations when considering AI tools. “Everyone wants to do everything all at once,” she said. “But that’s not practical. Companies need to consider the business case for adopting a particular AI tool.”

On the Regulatory Horizon

In general, technological innovation moves faster than the law. But the number of laws governing data privacy is expanding, and many of those directly address the growing use of AI. “In general, there are so many new laws emerging,” Salerno said. A legal department has two missions: to keep tabs on emerging laws and when they update, and to help the company adjust to the fast-changing regulatory environment.

“Privacy’s role has expanded a bit because so much of the new adoption of AI has to do with new uses of data,” Park said. “Weaving the web between what is the word of law and what these technologies mean is the big challenge.” Silver noted that, from a litigator’s perspective, this area seems ripe for disagreements.

Ey said that, where possible, legal and privacy departments should partner with non-legal resources within the business to keep their eyes and ears open and champion good practices. Bringing in legal or privacy teams to a project sooner can help that project move more efficiently and address potential issues early in the planning process.
“We’re not saying ‘No.’ We want to help you do it better,” Ey said.

Ethical Use of AI

Legal compliance is one key concern. The ethical use of AI is another related but separate issue. For example, concerns have been raised about biases in AI that could negatively impact members of marginalized communities. Lee said, “Bias is a key measure of the assessment process. You learn by working your way through this one. Our starting point is our Code of Conduct principles and from there adapting those to the deployment of AI.”

The key ethical question for companies to consider is, “We can do it, but should we?” Also, companies should make sure key stakeholders are on board with ethical and responsible AI concerns. But understand that when humans are involved, decisions will be subjective. “It’s an evolving process,” Lee said.

Park agreed. Companies absolutely need to have an AI policy, but human review should be a key element of that policy. A key prerequisite is a companywide understanding of the new technologies and implications, and cross-functional alignment and calibration of risk tolerance. “The human component is critical to ensuring the company’s principles and ethos are upheld,” Park said.

Key Takeaways

- Train Employees on AI Governance Principles: Employees should review guidelines and sign an agreement to abide by them.
- Leverage the Power of the Collective: Employ cross-collaborative reviews involving multiple departments, including Legal, IT, Procurement, and more.
- Focus on Transparency: Evolving regulations require transparency, but AI technology isn’t easily explainable to customers. Companies need to think through this component to get it right.
- Regularly Update AI Usage Policies: As AI technologies evolve, continuously refine and update your AI usage and governance policies to stay current.
- Conduct Frequent Audits: Regular audits should be performed to ensure compliance with AI governance standards and identify potential areas of improvement.
- Establish a Clear Reporting Mechanism: Implement a robust mechanism for reporting AI-related issues or unethical practices within the organization.
- Encourage Ethical AI Practices: Promote the development and use of AI technologies that prioritize ethical considerations and minimize biases.
- Engage Stakeholders at All Levels: Involve stakeholders from different levels of the organization in AI governance decisions to incorporate diverse perspectives.
- Monitor Regulatory Changes: Stay informed about changes in AI-related regulations and adapt your governance policies to ensure compliance.
- Utilize AI Decision Documentation: Document the decision-making processes of AI systems to ensure accountability and traceability.
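
For readers who want a concrete picture of the panel's suggestion to limit the types of prompts that can be put into a system, the following is a minimal Python sketch of a prompt screen. It is an illustration only, not a method described by the panelists: the pattern names, the regular expressions, and the functions `screen_prompt` and `is_allowed` are all hypothetical, and a real program would rely on the company's own data classification rules rather than two simple patterns.

```python
import re

# Hypothetical patterns for illustration only. A real deployment would use
# the organization's own definitions of sensitive data.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def screen_prompt(prompt: str) -> list[str]:
    """Return the names of the sensitive-data patterns found in a prompt."""
    return [
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    ]


def is_allowed(prompt: str) -> bool:
    """Allow a prompt only when no sensitive pattern matches."""
    return not screen_prompt(prompt)
```

A caller could check `is_allowed(prompt)` before forwarding text to a vendor's AI tool and, when it returns `False`, show the user which categories from `screen_prompt` triggered the block. Pattern matching of this kind misses many forms of sensitive data, so it would complement employee training and review rather than replace them.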

Automation Anywhere Frequently Asked Questions (FAQ)

When was Automation Anywhere founded?

Automation Anywhere was founded in 2003.

Where is Automation Anywhere's headquarters?

Automation Anywhere's headquarters is located at 633 River Oaks Parkway, San Jose.

What is Automation Anywhere's latest funding round?

Automation Anywhere's latest funding round is Loan.

How much did Automation Anywhere raise?

Automation Anywhere raised a total of $1.049B.

Who are the investors of Automation Anywhere?

Investors of Automation Anywhere include SVB Capital, Hercules Capital, SVB Financial Group, Arctic Venture Partners, Mindrock Capital and 11 more.

Who are Automation Anywhere's competitors?

Competitors of Automation Anywhere include Redwood Software, Celaton, Celonis, Tungsten Automation, Ephesoft and 7 more.


Compare Automation Anywhere to Competitors

WorkFusion specializes in AI-driven automation solutions for anti-money laundering (AML) risk mitigation within the financial services industry. The company offers AI Digital Workers that perform tasks such as sanctions screening, transaction monitoring, and customer due diligence to enhance compliance operations and reduce risk. These AI solutions are designed to scale team capacity, improve program efficiency, and support higher-value investigative work. WorkFusion was formerly known as Crowd Computing Systems. It was founded in 2010 and is based in New York, New York.

Nexus FrontierTech develops artificial intelligence and automation in financial services. The company offers modular plug-and-play automation solutions enabling decision-making processes, managing operations, and performance by bringing visibility, traceability, and usability to enterprise data in real time. It primarily serves the financial services industry, government organizations, and other sectors that require efficient development of structured processes for compliance, risk management, and innovation. It was formerly known as Innovatube. It was founded in 2015 and is based in London, United Kingdom.

Hyperscience is an enterprise AI platform provider specializing in intelligent document processing and hyperautomation technologies. The company offers solutions that transform unstructured content into structured, actionable data, enabling businesses to automate complex, mission-critical processes. Hyperscience serves various sectors, including financial services, government agencies, and insurance companies. It was founded in 2014 and is based in New York, New York.

JIFFY.ai specializes in AI-driven intelligent automation solutions within the technology sector, focusing on no-code platforms for enterprise digital transformation. The company offers a suite of pre-built HyperApps and tools for end-to-end process automation, designed to enhance operational efficiency and customer experience across various industries. JIFFY.ai primarily serves sectors such as banking, wealth management, media, and corporate finance. JIFFY.ai was formerly known as Option3. It was founded in 2018 and is based in Milpitas, California.

ElectroNeek focuses on workflow automation and integration within the enterprise software industry. It offers a platform that enables businesses to automate repetitive processes using robotic process automation (RPA), artificial intelligence (AI), and intelligent document processing (IDP). ElectroNeek primarily serves sectors that require process automation and document handling, such as the e-commerce, construction, and healthcare industries. It was founded in 2019 and is based in Cedar Park, Texas.

Tungsten Automation specializes in intelligent automation and digital workflow transformation within the technology sector. The company offers artificial intelligence (AI)-powered software solutions that automate data-intensive workflows for various business-critical applications. Tungsten Automation was formerly known as Kofax. It was founded in 1985 and is based in Irvine, California.
