Safe Superintelligence

Founded Year

2024

Stage

Series A | Alive

Total Raised

$1B

Valuation

$0000

Last Raised

$1B | 21 days ago

About Safe Superintelligence

Safe Superintelligence is an artificial intelligence company whose primary objective is the development of safe superintelligence. It treats safety and capabilities as technical problems to be solved together through engineering and scientific research, aiming to advance capabilities while prioritizing safety. Its business model emphasizes long-term progress over short-term commercial pressures. It was founded in 2024 and is based in San Francisco, California.

Research containing Safe Superintelligence

CB Insights Intelligence Analysts have mentioned Safe Superintelligence in 1 CB Insights research brief, most recently on Sep 5, 2024.

Expert Collections containing Safe Superintelligence

Expert Collections are analyst-curated lists that highlight the companies you need to know in the most important technology spaces.

Safe Superintelligence is included in 2 Expert Collections, including Artificial Intelligence.

Artificial Intelligence

14,767 items

Companies developing artificial intelligence solutions, including cross-industry applications, industry-specific products, and AI infrastructure solutions.

Unicorns - Billion Dollar Startups

1,244 items

Latest Safe Superintelligence News

Sep 18, 2024

Two weeks ago, OpenAI’s former chief scientist Ilya Sutskever raised $1 billion to back his newly formed company, Safe Superintelligence (SSI). The startup aims to safely build AI systems that exceed human cognitive capabilities. Just a few months before that, Elon Musk’s startup xAI raised $6 billion to pursue superintelligence, a goal Musk predicts will be achieved within five or six years. These are staggering rounds of funding for newly formed companies, and they add to the many billions already poured into OpenAI, Anthropic and other firms racing to build superintelligence.

As a longtime researcher in this field, I agree with Musk that superintelligence will be achieved within years, not decades, but I am skeptical that it can be achieved safely. Instead, I believe we must view this milestone as an “evolutionary pressure point” for humanity: one in which our fitness as a species will be challenged by superior intelligences with interests that will eventually conflict with our own.

I often compare this milestone to the arrival of an advanced alien species from another planet and point out the “Arrival Mind Paradox”: the fact that we would fear a superior alien intelligence far more than we fear the superior intelligences we’re currently building here on Earth. This is because most people wrongly believe we are crafting AI systems to “be human.” This is not true. We are building AI systems to be very good at pretending to be human, and to know humans inside and out. But the way their brains work is very different from ours, as different as any alien brain that might show up from afar.

And yet, we continue to push for superintelligence. In fact, 2024 may go down as the year we reach “Peak Human.” By this I mean the moment when AI systems can cognitively outperform more than half of human adults.
After we pass that milestone, we will steadily lose our cognitive edge until AI systems can outthink all individual humans, even the most brilliant among us.

AI beats one-third of humans on reasoning tasks

Until recently, the average human could easily outperform even the most powerful AI systems on basic reasoning tasks. There are many ways to measure reasoning, none of which is considered the gold standard, but the best known is the classic IQ test. Journalist Maxim Lott has been testing all major large language models (LLMs) on a standardized Mensa IQ test. Last week, for the very first time, an AI model significantly exceeded the median human IQ score of 100. The model that crossed the peak of the bell curve was OpenAI’s new “o1” system, which reportedly scored an IQ of 120.

So, does this mean AI has exceeded the reasoning ability of most humans? Not so fast. Administering standard IQ tests to AI systems is not quite valid, because their training data likely included the tests (and answers), which is fundamentally unfair. To address this, Lott had a custom IQ test created that does not appear anywhere online and therefore is not in the training data. He gave that “offline test” to OpenAI’s o1 model, and it scored an IQ of 95.

This is still an extremely impressive result: that score beats 37% of adults on the reasoning tasks. It also represents a rapid increase, as OpenAI’s previous model GPT-4 (released just last year) was outperformed by 98% of adults on the same test. At this rate of progress, it is very likely that an AI model will be able to beat 50% of adult humans on standard IQ tests this year.

Does this mean we will reach peak human in 2024? Yes and no. First, I predict yes: at least one foundational AI model will be released in 2024 that can outthink more than 50% of adult humans on pure reasoning tasks.
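The percentile figures quoted here follow from how IQ scores are scaled. As a minimal sketch, assume scores are normally distributed with mean 100 and standard deviation 15 (the usual convention for standardized IQ tests; the article does not state the scale used for Lott’s offline test). The share of adults scoring below a given IQ is then the normal cumulative distribution function evaluated at that score. The helper name `iq_percentile` is hypothetical, chosen for illustration.

```python
import math


def iq_percentile(iq: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """Return the percentage of a population scoring below `iq`.

    Assumption (not stated in the article): IQ ~ Normal(mean, sd),
    using the conventional mean of 100 and standard deviation of 15.
    """
    z = (iq - mean) / sd  # standard score
    # Standard normal CDF written in terms of the error function.
    return 50.0 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Scores mentioned in the article: o1 on the offline test (95),
# the median (100), Swarm AI groups (114), o1 on the Mensa test (120),
# and the conversational swarms (128).
for score in (95, 100, 114, 120, 128):
    print(f"IQ {score}: scores above about {iq_percentile(score):.0f}% of adults")
```

Under this assumption, an IQ of 95 lands near the 37th percentile and 128 near the 97th, consistent with the figures quoted in the article, while 100 sits exactly at the median that marks the author’s “peak human” threshold.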
From this perspective, we will exceed my definition of peak human and will be on a downward path toward the rapidly approaching day when an AI is released that can outperform all individual humans, period.

Second, I need to point out that we humans have another trick up our sleeves. It’s called collective intelligence, and it relates to the fact that human groups can be smarter than individuals. And we humans have a lot of individuals: more than 8 billion at the moment.

I bring this up because my personal focus as an AI researcher over the last decade has been using AI to connect groups of humans into real-time systems that amplify our collective intelligence to superhuman levels. I call this goal collective superintelligence, and I believe it is a viable pathway for keeping humanity cognitively competitive even after AI systems can outperform the reasoning ability of every individual among us. I like to think of this as “peak humanity,” and I am confident we can push it to intelligence levels that will surprise us all.

Back in 2019, my research team at Unanimous AI conducted our first experiments in which groups of people took IQ tests together by forming real-time systems mediated by AI algorithms. This first-generation technology, called “Swarm AI,” enabled small groups of 6 to 10 randomly selected participants (who averaged an IQ of 100) to amplify their collective performance to a collective IQ score of 114 when deliberating as an AI-facilitated system (Willcox and Rosenberg). This was a good start, but not within striking distance of collective superintelligence.

More recently, we unveiled a new technology called conversational swarm intelligence (CSI). It enables large groups (up to 400 people) to hold real-time conversational deliberations that amplify the group’s collective intelligence.
In collaboration with Carnegie Mellon University, we conducted a 2024 study in which groups of 35 randomly selected people answered IQ test questions together in real time as AI-facilitated “conversational swarms.” As published this year, the groups averaged IQ scores of 128 (the 97th percentile). This is a strong result, but I believe we are just scratching the surface of how smart humans can become when we use AI to think together in far larger groups.

I am passionate about pursuing collective superintelligence because it has the potential to greatly amplify humanity’s cognitive abilities, and unlike a digital superintelligence, it is inherently instilled with human values, morals, sensibilities and interests. Of course, this raises the question: how long can we stay ahead of purely digital AI systems? That depends on whether AI continues to advance at an accelerating pace or hits a plateau. Either way, amplifying our collective intelligence might help us maintain our edge long enough to figure out how to protect ourselves from being outmatched.

When I raise the issue of peak human, many people point out that human intelligence is far more than the logic and reasoning measured by IQ tests. I fully agree, but when we look at the most “human” of all qualities, creativity and artistry, we see evidence that AI systems are catching up with us just as quickly. Only a few years ago, virtually all artwork was crafted by humans. A recent analysis estimates that generative AI is producing 15 billion images per year, and that rate is accelerating. Even more surprising, a study published just last week showed that AI chatbots can outperform humans on creativity tests.
To quote the paper, “the results suggest that AI has reached at least the same level, or even surpassed, the average human’s ability to generate ideas in the most typical test of creative thinking (AUT).” I’m not sure I fully believe this result, but it’s just a matter of time before it holds true.

Whether we like it or not, our evolutionary position as the smartest and most creative brains on planet Earth is likely to be challenged in the near future. We can debate whether this will be a net positive or a net negative for humanity, but either way, we need to be doing more to protect ourselves from being outmatched.

Louis Rosenberg is a computer scientist and entrepreneur in the fields of AI and mixed reality. His new book, Our Next Reality, explores the impact of AI and spatial computing on humanity.

Safe Superintelligence Frequently Asked Questions (FAQ)

When was Safe Superintelligence founded?

Safe Superintelligence was founded in 2024.

Where is Safe Superintelligence's headquarters?

Safe Superintelligence's headquarters is located in San Francisco, California.

What is Safe Superintelligence's latest funding round?

Safe Superintelligence's latest funding round is Series A.

How much did Safe Superintelligence raise?

Safe Superintelligence raised a total of $1B.

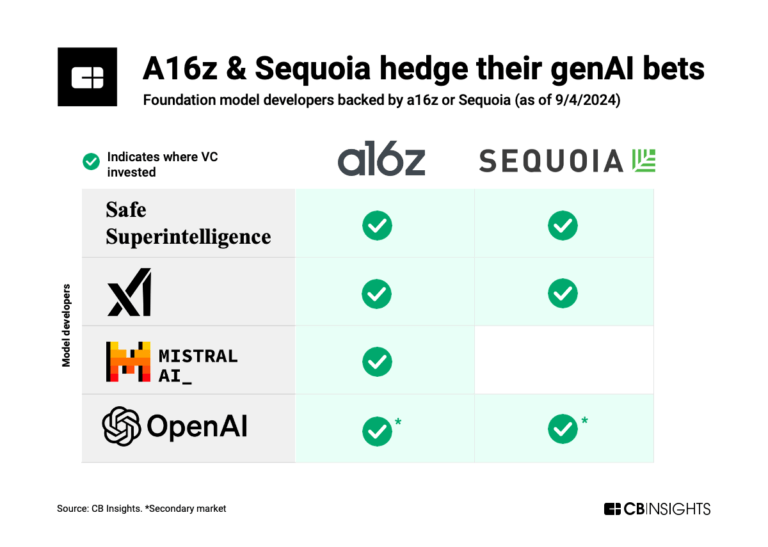

Who are the investors of Safe Superintelligence?

Investors of Safe Superintelligence include SV Angel, Andreessen Horowitz, Nat Friedman and Daniel Gross, Sequoia Capital and DST Global.

Who are Safe Superintelligence's competitors?

Competitors of Safe Superintelligence include Klover.

Compare Safe Superintelligence to Competitors

OpenAI is an artificial intelligence (AI) research and deployment company. The company's main offerings include generative models, AI safety research, and an Application Programming Interface (API) platform providing access to its latest AI models. OpenAI primarily serves sectors that require advanced AI technology, such as the technology and creative industries. It was founded in 2015 and is based in San Francisco, California.

xAI focuses on artificial intelligence, specifically in the domain of large language models. The company's main product, Grok, is designed to answer questions and suggest potential inquiries, functioning as a research assistant that helps users find information online. xAI primarily caters to the AI research community and the general public seeking AI tools for information retrieval and understanding. It was founded in 2023 and is based in Burlingame, California.

Klover offers Artificial General Decision Making™ (AGD™) and aims to improve decision-making by humanizing AI. Its proprietary modular library of AI systems shortens development time, enabling rapid prototyping and testing of tailored Ensembles of AI Systems with Multi-Agent Systems Core in Decision Making (EAIS-MASC'd). Klover's approach to AGD™ spans quantum computing, data center and data architecture, software development, and AGD™ research. The company serves individuals and organizations. It was founded in 2023 and is based in San Francisco, California.

Microsoft operates as a technology company with a focus on software development and services in the information technology sector. The company offers a range of products including operating systems, office software, gaming, cloud computing services, and hardware devices. Microsoft primarily serves various sectors including individual consumers, educational institutions, and businesses of all sizes. It was founded in 1975 and is based in Redmond, Washington.

Google operates as a multinational technology company. It specializes in internet-related services and products, including online advertising technologies, search engine technology, cloud computing, and computer software. The company provides products such as Google Maps, Google Drive, Google Docs, YouTube, and Gmail. It also offers cloud services and other products such as Chromebooks, the Pixel smartphone, and smart home products, which include Nest and Google Home. It was founded in 1998 and is based in Mountain View, California.

Apple focuses on consumer electronics and technology, operating in sectors such as personal computing, mobile communication devices, and digital music. The company offers a range of products including smartphones, personal computers, tablets, wearables, and home entertainment systems, as well as a variety of services like digital content streaming, financial services, and a trade-in program. Apple primarily sells to the consumer market, with a strong presence in the digital content and mobile device industries. Apple was formerly known as Apple Computer, Inc. It was founded in 1976 and is based in Cupertino, California.
